You create training, educational, and support content to foster changes in behavior. Whether shifting the way your customers use your product, helping coworkers rethink processes, or assisting a customer with a product problem, your content should have a discernible effect on how people do things. This goes for both in-person and online training.

The best training content does this quickly and efficiently, allowing customers and colleagues to get on with their days and keep work moving.

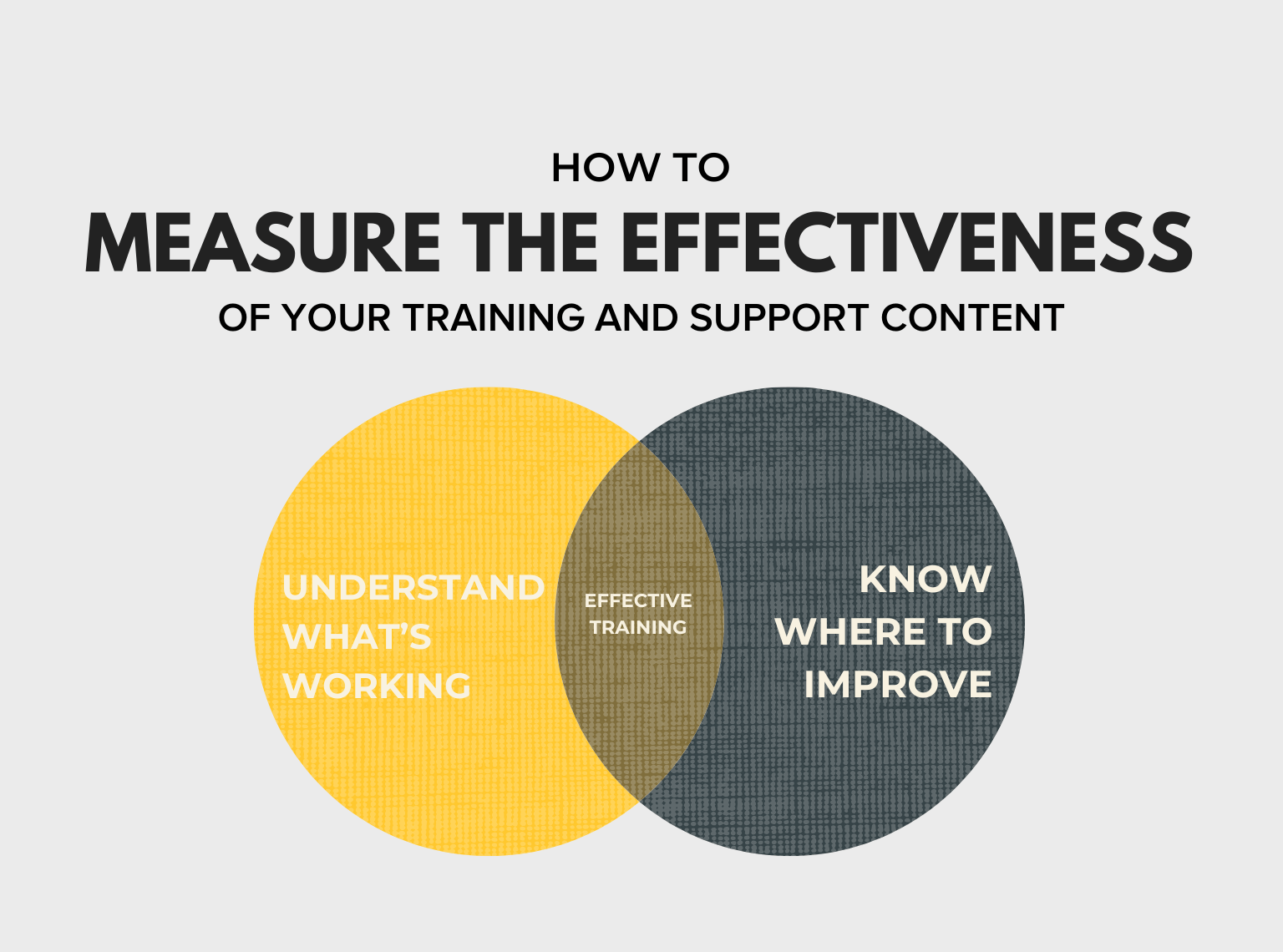

But how do you measure training and support effectiveness?

For TechSmith Academy, we spoke with some of the leading experts in training to find out how they measure their own effectiveness. They agree that you must learn to measure your content’s effect on the audience’s behavior to understand what’s working — and where you can improve.

They also offered a number of tips you can start using right now to begin analyzing your content’s effectiveness.

Watch TechSmith Academy – For Free!

TechSmith Academy is a free online learning platform with courses to help you learn more about visual communication and video creation.

Start with a baseline

You can’t measure your training content if you don’t know where things stand now. That means starting with a baseline.

This can include already-established metrics such as customer data, employee performance, or — in the case of things that can’t be easily measured — any anecdotal evidence, customer complaints or suggestions, etc.

As Cindy Laurin notes, “Understand what the outcome is that you’re looking for … so that whatever you build is going to actually fulfill what you’re trying to accomplish to begin with.”

While this may not be easy, there are ways to get information that can help you set a baseline.

As Kati Ryan notes, you may need to get creative.

“Sometimes it’s qualitative. And so it’s exit interview data, or is it being mentioned?,” she said. “Then, you can measure an uptick based on the learning.”

Remember that your business likely tracks a lot of metrics that can be leveraged to measure training effectiveness.

Toddi Norum believes this is a great way to measure success.

“If you see improvement in something that was previously monitored or tracked as a problem, then you know the training probably had something to do with that,” she suggested.

However, she cautions, you should be able to align training activities with the analytics.
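To make that comparison concrete, here is a minimal sketch in Python. It assumes you already track a metric (weekly support tickets, in this made-up case) and can split it into a period before the training and a period after it. The numbers and the `percent_change` helper are hypothetical, invented for illustration, and a change in the metric only suggests the training helped; it does not prove it.

```python
from statistics import mean


def percent_change(baseline, after):
    """Return the percent change from the baseline average to the post-training average."""
    base_avg = mean(baseline)
    after_avg = mean(after)
    if base_avg == 0:
        raise ValueError("baseline average is zero; percent change is undefined")
    return (after_avg - base_avg) / base_avg * 100


# Hypothetical weekly support-ticket counts for one product feature.
tickets_before = [40, 44, 36, 40]  # four weeks before the training
tickets_after = [30, 28, 32, 30]   # four weeks after the training

change = percent_change(tickets_before, tickets_after)
print(f"Ticket volume changed by {change:.1f}%")  # prints: Ticket volume changed by -25.0%
```

Comparing the same metric over matching time windows is one simple way to line up a training activity with the analytics your business already collects.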

Find out what needs to change

Once you have your baseline data, focus your content on the changes you want to see.

As Tim Slade puts it, “What is it that people are doing, what aren’t they doing, and what’s the reason why?”

Once you know the answers to these questions, you can create content focused on how to change those behaviors. Ultimately, it’s not about what you want them to know, but what you want them to do.

“Then, figuring out whether your training is working or not is pretty simple, because it’s just a matter of then answering, are they doing it now or not?” he said.

Get feedback from participants

With your baseline in hand, and after implementing a training plan, it’s time to measure the effectiveness of your efforts.

We often assume that a measure of success needs hard numbers to be valid, but our experts noted that there are plenty of good ways to tell whether your content accomplished its goals without them.

Many, including Trish Uhl, recommend talking to your audience to find out what they think.

“Put it into the hands of the people that need to consume it and then ask them,” she said.

At first glance, some may think this is too anecdotal to be a truly effective strategy, but over the course of time, by collecting enough feedback, you may see patterns in responses that can help you get a clear picture of your content’s strengths and weaknesses. This is especially useful when you combine it with quantitative metrics as well.
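One way to spot those patterns is to tag each piece of feedback with a theme and count how often each theme comes up. The sketch below assumes someone has already read the responses and tagged them by hand. The responses, the theme names, and the `theme_counts` helper are all hypothetical.

```python
from collections import Counter

# Hypothetical feedback: each response has been hand-tagged with one or more themes.
responses = [
    {"learner": "A", "themes": ["too long", "clear examples"]},
    {"learner": "B", "themes": ["too long"]},
    {"learner": "C", "themes": ["clear examples", "audio quality"]},
    {"learner": "D", "themes": ["too long", "audio quality"]},
]


def theme_counts(responses):
    """Count how many responses mention each theme."""
    counts = Counter()
    for response in responses:
        # set() so a theme counts once per response even if it was tagged twice
        counts.update(set(response["themes"]))
    return counts


counts = theme_counts(responses)
for theme, n in counts.most_common():
    print(f"{theme}: {n} of {len(responses)} responses")
```

With only four responses this proves little, but the same tally run over months of feedback can show which comments are one-offs and which keep coming back.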

Remember, it’s okay to be wrong (as long as you adapt)

People in the software industry are familiar with the idea of failing fast and adapting to produce the best possible result, but for others, failure can feel like a big deal, especially when you’re creating content for customers.

However, figuring out what works and what doesn’t is a process, and often requires making mistakes and learning from them. Rome wasn’t built in a day!

“Be willing to be wrong,” she said. “Be willing to be like, ‘Okay, this was an experiment, and I thought it was going to produce this kind of result.’”

And if it doesn’t, be ready to tweak it or, in extreme cases, let it go and start over.

The Kirkpatrick Model for measuring training

While the experts we interviewed didn’t specifically mention the Kirkpatrick model, no discussion of training and customer education content would be complete without at least noting its existence.

And, much of what our experts said can be found in this model, which reinforces its importance.

The Kirkpatrick model was developed in the 1950s by University of Wisconsin professor Donald Kirkpatrick. It provides a simple, four-level approach to measuring the effectiveness of training and educational content.

I won’t go into too much detail here, but here are the basics:

Level 1 — Reaction

Measures how learners reacted to the training, including how relevant and useful they found it. Uses surveys and other direct feedback methods.

Level 2 — Learning

How much knowledge did learners acquire from the training? Uses test scores, business metrics, and other hard data sources.

Level 3 — Behavior

How has the training altered behavior and performance? Uses questionnaires, peer and manager feedback, job performance KPIs, and more.

Level 4 — Results

Measures tangible results, such as reduced costs, improved quality, increased productivity, etc.

You can see pieces of the tactics suggested by our experts in these methods. For more information on the Kirkpatrick model, check out this blog.

There’s no one way to measure your results

From hard numbers and stats to simple audience feedback, there are a lot of ways to measure training effectiveness. The most important thing is that you are measuring it and then acting on what you find. Reinforce methods that work and alter those that don’t.

If you haven’t checked out TechSmith Academy, now is a great time to give it a try. From incredible tutorials on improving video content to more interviews with experts, TechSmith Academy is a TOTALLY free learning tool to help you take your customer education, tutorial, and training videos to a whole new level.

Frequently asked questions

There are many ways to measure your training program. Consider both quantitative and qualitative metrics, such as analytics and customer feedback. Often, taking a look at both can help you get a better understanding of employee or customer experience with your training than one alone.

Use feedback from participants to help figure out which parts of the training went well and which didn’t. Then, you can use that information to build even stronger content. The important thing is that you “fail forward” and use any shortcomings as learning experiences.
